
v14.0 Optimized Builds - Part 2: Containers

Proxmox, OpenNode & Docker

Following close behind the Optimized Builds Part 1 announcement, I am happy to present Part 2: container builds. Part 2 includes optimized container builds for Proxmox, OpenNode and Docker.

The Proxmox builds are relatively generic, so they should also be useful for users of vanilla LXC or OpenVZ. Our (still in progress) v14.0 LXC appliance will leverage the Proxmox container builds.

TurnKey Containers

TurnKey container builds are essentially an archive of the installed TurnKey Linux appliance filesystem, with the kernel removed (containers use the host's kernel). All container builds support pre-seeding; otherwise inithooks will run interactively at first login. Some further notes are in the relevant Proxmox, OpenNode and Docker sections below.
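
Here is a minimal sketch of pre-seeding. It assumes inithooks reads shell `export` lines from `/etc/inithooks.conf` inside the container and skips the interactive prompt for any value already set there. The helper name `write_preseed` is hypothetical. The variable names in the example (`ROOT_PASS`, `APP_PASS`) are also assumptions, because the set each appliance expects differs. Check the inithooks documentation for your appliance.

```shell
# Hypothetical helper: write an inithooks preseed file from KEY=VALUE pairs.
# Assumption: inithooks sources /etc/inithooks.conf and uses any values
# exported there instead of prompting for them at first login.
write_preseed() {
    out="$1"; shift
    : > "$out"                # create or truncate the file
    chmod 600 "$out"          # it will hold plain-text passwords
    for pair in "$@"; do
        key="${pair%%=*}"     # text before the first '='
        val="${pair#*=}"      # text after the first '='
        # Single-quote the value so spaces survive when the file is sourced
        # (values containing a single quote are not handled here).
        printf "export %s='%s'\n" "$key" "$val" >> "$out"
    done
}
```

For example, `write_preseed ./inithooks.conf ROOT_PASS=changeme` writes a one-line file. How you then place it in the container depends on your platform.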

Both Proxmox and OpenNode have internal mechanisms for downloading TurnKey container templates, and most users will probably find those the most convenient option. Individual v14.0 Proxmox and OpenNode optimized container builds can also be downloaded from their respective appliance pages (e.g. LAMP, WordPress, Node.js, etc.). Alternatively, the entire library can be downloaded via one of our mirrors.

Docker builds are a little different as they are (only) hosted on the Docker Hub. They are downloaded using the "docker pull" command. For example, LAMP:

docker pull turnkeylinux/lamp-14.0
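
Both Docker examples in this post follow the pattern `turnkeylinux/<appliance>-14.0`. A small helper can therefore build the image name and pull several appliances in one go. This sketch assumes every TurnKey image on the Docker Hub uses that naming pattern, which this post only confirms for `lamp` and `core`. The function names `tkl_image` and `tkl_pull` are hypothetical.

```shell
# Hypothetical helper: build the Docker Hub image name for an appliance.
# Assumption: all images follow turnkeylinux/<appliance>-<version>.
tkl_image() {
    app="$1"
    version="${2:-14.0}"   # default to v14.0 if no version is given
    printf 'turnkeylinux/%s-%s\n' "$app" "$version"
}

# Pull each named appliance in turn, stopping at the first failure.
tkl_pull() {
    for app in "$@"; do
        docker pull "$(tkl_image "$app")" || return 1
    done
}
```

For example, `tkl_pull lamp core` pulls both of the images used in this post.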

Proxmox builds

The Proxmox builds were previously known as "openvz". However, as of v4.0, PVE have replaced OpenVZ with LXC (see here). The TurnKey container templates are aimed at PVE v4.0 (LXC) but also support PVE v3.4 (OpenVZ). The need for a new name seemed obvious so the "new" Proxmox build was born! :)

A significant tweak was required because the Debian Jessie version of SystemD (the init system in the other v14.0 builds) is incompatible with running inside an LXC container. So for the v14.0 Proxmox build we decided to replace SystemD with SysVInit (as used in v13.0).

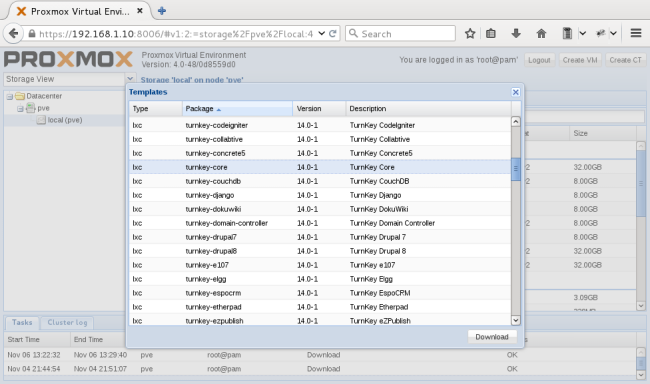

Alon has published the updated "TurnKey channel index" so PVE v4.0 users should already have them available for download. Get them now from within the webUI.

TurnKey v14.0 appliances ready for download within the Proxmox webUI

If for some reason the TurnKey templates aren't showing in your webUI (and you are running PVE v4.0), then try updating from the command line:

pveam update
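
After updating, you can also list and fetch templates from the command line with `pveam available` and `pveam download`. The filter below is a sketch built on several assumptions. It assumes each line of `pveam available` output has the form "<section> <template>", with the template name in the last column. It also assumes v14.0 TurnKey template names contain both "turnkey" and "_14.0". The function name `tkl14_templates` is hypothetical.

```shell
# Hypothetical filter: print TurnKey v14.0 template names from
# `pveam available` output read on stdin.
# Assumptions: the template name is the last field on each line, and
# v14.0 TurnKey names contain "turnkey" and "_14.0".
tkl14_templates() {
    awk '$NF ~ /turnkey/ && $NF ~ /_14\.0/ { print $NF }'
}
```

Usage: `pveam available --section turnkeylinux | tkl14_templates`. You can then fetch a template with `pveam download local <template>`, replacing `local` with whichever storage holds your templates.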

For PVE v3.4, TKL v14.0 templates will need to be downloaded manually. TurnKey v13.0 templates (openvz) should also work on PVE v4.0, although they have not been extensively tested.

OpenNode builds

The OpenNode build uses the Proxmox build as a base, so it too uses SysVInit (rather than SystemD). Where the OpenNode build deviates, though, is in how it is packaged: it is bundled as an OVA (not to be confused with the VM OVA build, which contains a VMDK disk image!).

If you are using OpenNode and the appliances aren't available, then try updating the opennode-tui package:

yum --enablerepo=opennode-dev update opennode-tui

Or have a read on the OpenNode wiki.

Docker

Docker builds are a bit of an odd fit alongside two hypervisor-focused builds. However, they are containers! :)

The Docker build process has not changed significantly for v14.0. As per previous releases it is a full OS container. So the process to use them is essentially the same as v13.0.

So to use Core as an example (the steps are similar with other appliances):

To download Core:

docker pull turnkeylinux/core-14.0

To then launch Core interactively:

CID=$(docker run -i -t -d turnkeylinux/core-14.0)

CIP=$(docker inspect --format='{{.NetworkSettings.IPAddress}}' $CID)

docker logs $CID | grep "Random initial root password"

ssh root@$CIP
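
The launch steps above can be wrapped in a single function. This is only a sketch, and `tkl_launch` is a hypothetical name. It runs the same `docker run`, `docker inspect` and `docker logs` commands shown above. It assumes the container has finished booting by the time the logs are read; if the password line is missing, wait a moment and re-run the `docker logs` step.

```shell
# Hypothetical wrapper around the launch steps above: start the container,
# then print its ID, IP address and the initial root password log line.
tkl_launch() {
    image="$1"
    cid=$(docker run -i -t -d "$image") || return 1
    cip=$(docker inspect --format='{{.NetworkSettings.IPAddress}}' "$cid")
    echo "Container ID: $cid"
    echo "Container IP: $cip"
    # The password line may not appear until first boot has completed.
    docker logs "$cid" | grep "Random initial root password"
    echo "Log in with: ssh root@$cip"
}
```

For example, `tkl_launch turnkeylinux/core-14.0` launches Core and prints what you need to log in over SSH.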

I hope you have an opportunity to try out some of these builds and let us know how you go. If you have feedback then please comment (below), use our forums and/or post bug reports and/or feature requests on our GitHub Issue tracker.

Comments


This is great news! Is it possible for us to build our own container builds from the appliance source? There used to be a couple of tklpatches which worked on v13 to set up a build/ISO for headless use and preseeding. What is the recommended method if we want to create our own container from an appliance?

You can use Buildtasks

I have it cloned in /turnkey/, and to create a Core container from my repo I would do this:

When it's done you should find a tar.gz in /mnt/container/

Note: you need to build the ISO first (e.g. ./bt-iso core); otherwise it will download the default TurnKey ISO.
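
Once the build finishes, you can script the step of picking out the resulting archive from /mnt/container/. This sketch assumes the most recently modified .tar.gz in that directory is the one you just built. The function name `latest_tarball` is hypothetical.

```shell
# Hypothetical helper: print the most recently modified .tar.gz in a
# directory (default /mnt/container, where the container build output lands).
latest_tarball() {
    dir="${1:-/mnt/container}"
    # ls -t sorts newest first; errors (e.g. no matching files) are discarded.
    ls -t "$dir"/*.tar.gz 2>/dev/null | head -n 1
}
```

For example, `tar -tzf "$(latest_tarball)" | head` lists the first few entries of the newest build.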


This looks promising. However, I'm running into the error "bt-container: line 61: /usr/local/src/pfff/build/config/common.cfg: No such file or directory". Where do I find the recommended contents of this common.cfg file?

--EDIT--

Never mind, running it a second time works. The first time, it had to install cmake and pfff, which must have messed up determining the BT_CONFIG variable.

Just realised that I had missed an important point.


Thanks for the tips. I had actually already figured out from the source code that I needed to build the ISO first. I'll keep in mind that it needs a git repo for custom appliances.

I also figured out that I can rename the ISO: as long as I keep the same basic name and pass my custom name (e.g. openldap-struebel) as the appliance, the bt-container script will work quite happily with it. This works well for creating containers of patched appliances, which I generate as described below.

To generate a patched ISO, I hacked together a script based on the bt-iso script which applies a tklpatch to the root.sandbox before generating the ISO. I'm currently using that with my tklpatches to customize the base appliances. If you'd like, I can publish it for possible inclusion in the official buildtasks. It does require a patch to the tklpatch-extract-iso script so that it unsquashes the root.sandbox over the product.rootfs if it exists in the ISO. Should I fork the tklpatch repo and submit a pull request with that patch?

Most of my patches are published on my GitHub account, although I don't think I've put my master patches up. There are a couple where I configure specific users that I don't want to publish publicly. The main reason for using the patch system is maintainability, since I use some of the patches for multiple appliances. I was thinking that, to include this in the TKLDev system, it would be nice to allow a user common, similar to the official common, that could hold user-custom makefiles, overlays, scripts, etc. Possibly I could just fork the TurnKey common and create a separate branch; I'm just not super comfortable with advanced usage of git, especially from the command line, since I'm primarily a Windows user with TortoiseHg. What are your thoughts on supporting a user common vs just having us fork the TurnKey common?

Sounds like an interesting approach

So, to rephrase myself: if you can extend buildtasks' flexibility without significantly altering its current functionality, I don't see any issue with that. I'd need to defer to Alon for final approval though (I don't have write privileges to buildtasks). So if it's not too much effort, perhaps do a PR. I'll have a quick look over it and then get Alon to have a look at it too and give you some feedback. How does that sound?

I think you need to give more context for the concept.

Hi,

I think we lack information about why we need to run TurnKey Core first.

How do we get the root password of the specific Docker build we need to use, for example WordPress or File Server? Is it the same as the root password from Core? What if we have changed the root password of Core?

You don't need to run core first

When you first launch the container it should provide you with inithooks screens which you complete. Part of that process sets the root account password (and other passwords you may need).

Yes

Hi Eric

Your other suggestion is a good one too. Although we probably should also note that ideally you shouldn't be launching Docker as root due to potential security concerns...

We really need to transfer all this info into our docs and then keep them up to date. Unfortunately there just aren't enough hours in the day to do maintenance, development, support and still keep on top of all of these somewhat lower priority jobs.

I've just opened an issue on our tracker cross referencing your post. I'm not sure when we'll get to it, but at least it won't get forgotten now...
